Archive

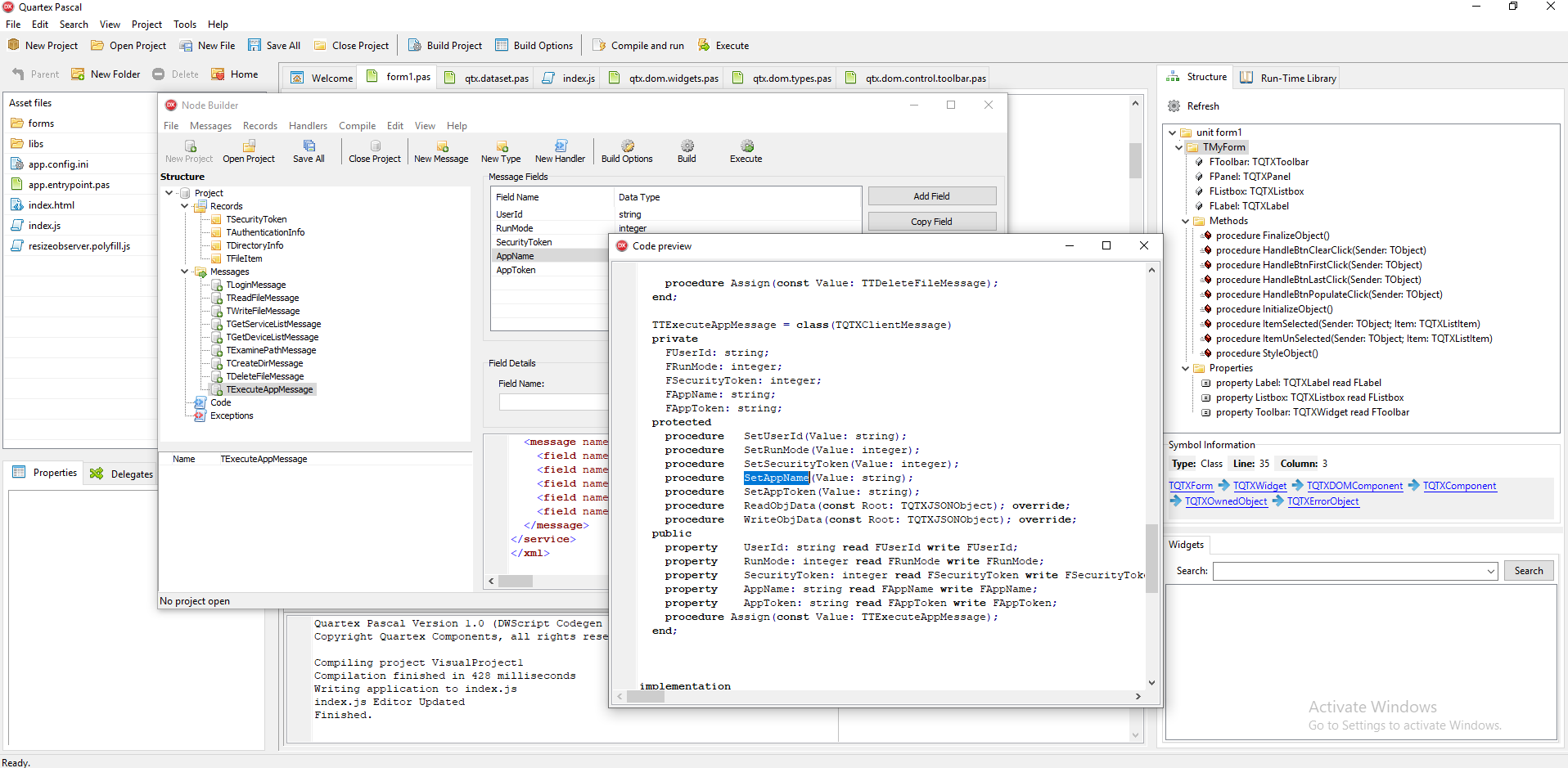

Quartex Pascal: Dynamic JS structures

If you work closely with JavaScript, it’s important to be able to approach JS in a way that is both familiar to Object Pascal developers and compatible with the underlying reality of JavaScript. We want to do as much as possible in Object Pascal, but to achieve that we first need to learn how to integrate properly with JavaScript without loss of fidelity and performance.

Object Pascal is strictly typed and linear in execution, which has a lot of benefits for a traditional developer. But JavaScript is the exact opposite and thrives on untyped data and async execution. It is only recently that concepts such as classes have appeared in JavaScript, and they have little in common with traditional C/C++ or Pascal classes.

In order to make JavaScript’s ad-hoc data structures manageable from Object Pascal, we needed to introduce something new. And that is what anonymous classes are all about.

What on earth are anonymous classes?

In short, an anonymous class is essentially an object instance that is created “ad-hoc” where you define it. Please keep in mind that the class we are talking about here is not a Pascal class type, but a JavaScript object instance. So in Delphi terms this is closer to a managed record than an object instance.

The syntax could not be simpler:

The above snippet will create a JavaScript object containing the field structure we have defined between the class and end keywords. The reason for this somewhat spartan approach is that JS expects these types of structures as parameters practically everywhere, and we wanted to keep things as short as possible. Having to pre-define a type would defeat the entire purpose, and a constructor call or new keyword would just get in the way.
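Conceptually, what you end up with is comparable to a JavaScript object literal. Here is a minimal sketch in JavaScript of the kind of object such a declaration is described as producing. The field names (`duration`, `easing`) are made up for illustration and are not taken from the RTL:

```javascript
// Hypothetical field names, for illustration only.
// The result is a plain object like this one:
const options = {
  duration: 1000,   // a number
  easing: "linear"  // a string
};

// No class, constructor or custom prototype is involved
const isPlainObject = Object.getPrototypeOf(options) === Object.prototype;
```

The point is that nothing but the named fields exists on the object, which is exactly what most browser APIs expect to receive.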

Defining datatypes for the fields is optional. As long as the data is compatible with whatever the DOM is expecting, it will work just fine.

To show you how elegantly this solves integration, let’s deal with a real-life example: the HTML element.animate() method.

Parameters simplified

Before we look at the code, keep in mind that all QTX widgets (visual controls) manage an underlying HTML element for the duration of their life cycle. This means that the widget code will create, maintain and ultimately destroy the HTML element that visually represents it, just like the VCL does for WinAPI controls.

The default element for TQTXWidget is the <DIV> element. Different widgets override this and create other browser element types. You can access the element directly via the Handle property, which is a direct reference to the element managed by the widget code. This is the exact same approach that the Delphi VCL uses, so it should be second nature to Delphi and Free Pascal developers.

So when you want to work directly with the element, you can either just use the Handle directly, or typecast it to JElement for simplicity (all DOM types are prefixed with J in our RTL).

Since THandle derives from variant, you don’t really need to typecast; you can just write the method name directly, a bit like how you can call COM methods through a Delphi variant reference. In such a case you have to make sure the method or property is spelled correctly (JavaScript is case sensitive) and that the parameters match.

Both of these syntaxes can be used, just like COM:

- self.Handle["someMethod"](param1, param2);

- self.Handle.someMethod(param1, param2);
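On the JavaScript side these two forms are equivalent ways of reaching the same member. A small sketch, using a stand-in object instead of a real DOM element (the `someMethod` body is invented so the example can run anywhere):

```javascript
// A stand-in for a DOM element so the sketch runs outside a browser
const handle = {
  someMethod(a, b) { return a + b; }
};

const viaBrackets = handle["someMethod"](1, 2); // name given as a string
const viaDot = handle.someMethod(1, 2);         // name written directly

// Names are case sensitive: "somemethod" is a different, missing member
const wrongCase = typeof handle["somemethod"];
```

Both calls return the same value, while the misspelled name simply does not exist on the object. That is why spelling matters when you skip the typecast.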

To make things easier I suggest you typecast to JElement, so that you get the benefit of code suggestion and parameter hints. The JElement definition is a bit spartan, I only defined what I needed to write the RTL. I will re-visit these types when I have more time and define them completely. The animate() definition will be there in the update this weekend.

But let’s get back to the example, namely the Element.animate() method! It is defined as such:

element.animate(keyframes, options);

In Object Pascal syntax it would look like this:

procedure JElement.animate(keyframes: array of variant; options: variant);

If we look at the developer documentation for this method, we see that the first parameter expects an array of keyframe structures. The way animation works in a browser is that you define how an element should look or be positioned when an animation starts, and then how it should look or be positioned when the animation ends. Based on how long the animation runs, the browser figures out which frames should appear between the keyframes.

The simplest animation would have a start and stop keyframe, but you can define as many keyframes as you like. This type of animation is called “tweening”. The QTX runtime library actually implements its own tweening engine (qtx.dom.tween.pas), which will be used as a fallback mechanism for browsers that don’t support the animate() method. If you don’t quite understand how tweening works, you should open up that unit and learn from it.

The challenge for us is that you can animate more or less every CSS property HTML5 supports, which means it becomes impossible for us to define a traditional Object Pascal type for the keyframe parameter. At least not without boxing developers in or adding some overhead.
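To make the idea of in-between frames concrete, here is a minimal sketch of linear tweening in JavaScript. It is not the code in qtx.dom.tween.pas, and the `lerp` and `tween` helpers are names chosen for this example. Real engines also apply easing curves and handle units such as px:

```javascript
// Linear interpolation between a start and stop value.
// t runs from 0 (start keyframe) to 1 (stop keyframe).
function lerp(from, to, t) {
  return from + (to - from) * t;
}

// Compute the in-between frame for every numeric property in the keyframes
function tween(startFrame, stopFrame, t) {
  const frame = {};
  for (const key of Object.keys(startFrame)) {
    frame[key] = lerp(startFrame[key], stopFrame[key], t);
  }
  return frame;
}

const start = { opacity: 1, left: 0 };
const stop = { opacity: 0, left: 200 };
const halfway = tween(start, stop, 0.5); // { opacity: 0.5, left: 100 }
```

Calling `tween` repeatedly with a `t` derived from elapsed time divided by duration gives you the frames between the two keyframes.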

Unassigned is important

The thing about JS and data structure parameters is that you should only name and assign values to properties you actually use. Most JS frameworks, when dealing with structures, will just check if a named field exists, and then whether its value is unassigned or not. Think variant programming in Delphi here, and how null does not mean empty; only unassigned means empty.

So whenever we work with such structures, we must never declare fields we don’t actually use, and absolutely not assign values to them. Doing so will wreak havoc, because the browser would interpret this the wrong way and apply them, even if you just set them to null (!). That is why we should not define a JKeyframe type: a strict type would declare every field up front, which could yield unexpected behavior.
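The difference between a field that is absent and one that is set to null can be sketched in a few lines of JavaScript. The `appliedFields` function below is a hypothetical stand-in for a framework that applies every assigned field it finds; it is not how any particular browser implements animate():

```javascript
// Stand-in for a framework that applies every assigned field on a structure
function appliedFields(keyframe) {
  const applied = [];
  for (const name in keyframe) {
    if (keyframe[name] !== undefined) {
      applied.push(name);
    }
  }
  return applied;
}

const sparse = { opacity: 0 };                  // only what we use
const padded = { opacity: 0, transform: null }; // null is still a value

const fromSparse = appliedFields(sparse); // ["opacity"]
const fromPadded = appliedFields(padded); // ["opacity", "transform"]
```

The padded structure causes `transform` to be picked up even though we never meant to animate it, which is the kind of surprise a fully declared record type would invite.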

Let’s look at how JavaScript developers would call the element.animate() method:
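A typical call, sketched here from the public Web Animations API rather than reproduced from the original listing, might look like this. The `fadeOut` helper and the chosen values are assumptions for illustration:

```javascript
// Fade an element out over one second.
// The keyframes array and options object are passed as plain object literals.
function fadeOut(element) {
  return element.animate(
    [
      { opacity: 1 }, // start keyframe
      { opacity: 0 }  // stop keyframe
    ],
    {
      duration: 1000, // milliseconds
      iterations: 1
    }
  );
}
```

In a browser you would pass a real element, for example one obtained from `document.querySelector`. Note that the structures only name the properties actually being animated.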

This is where anonymous classes come in and save the day for us. Since the class is “anonymous”, there is no type declaration or consequent field checking involved. You essentially type the object structure ad-hoc where you need it, with the values you need.

Here is how you would call the element.animate() method from Quartex Pascal:

Use it when needed

The usefulness of anonymous classes should be self-evident to everyone:

- First, we get to co-exist with JavaScript without having to manually construct our parameters before calling. It strikes a compromise between structure and efficiency that is optimal imo.

- Secondly, these class instances are thin and require less memory than a TObject based instance. There simply is no overhead and the generated code is 1:1 JavaScript with no magic infrastructure.

- Third, we can read and use standard HTML documentation without having to jump through hoops or manually construct JavaScript object parameters. No asm section is needed.

- Fourth, since a JS object is a JSON object by nature, anonymous classes can be used when calling methods that expect a JSON object, and you can serialize / parse them on the spot (!). This affects a lot of scenarios, especially working with REST calls.
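The last point is easy to demonstrate on the JavaScript side. A short sketch using the standard JSON functions; the field names and values are invented for the example:

```javascript
// A plain object of the kind an anonymous class produces (hypothetical fields)
const request = { user: "anna", retries: 3 };

// Serialize on the spot, e.g. for the body of a REST call
const body = JSON.stringify(request); // '{"user":"anna","retries":3}'

// Parse a response back into an object
const reply = JSON.parse('{"ok":true,"id":42}');
```

No intermediate mapping layer is needed, since the structure and its serialized form share the same field names.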

The only downside is that you cannot inherit from these structures since they are defined ad-hoc where you use them. This kind of definition was tailor made for integrating with JS, like many other aspects of the DWS / QTX dialect.

You can use them in your Pascal widget code too, there is nothing stopping you. However, if the data is uniform and has a fixed structure (and initialization of fields is not an issue, like it is under most JS frameworks), you might want to consider a Record type or JObject based class definition to save yourself potential naming errors. The downside of anonymous structures and named fields is that JavaScript is case sensitive. This means that even a single letter typo can be enough to cause havoc in your code. And you end up searching for hours to find that one place where you used an uppercase rather than a lowercase.

Traditional Object Pascal declarations save you from this through strict typing and uniform naming. That is one of the benefits of compiling from Object Pascal to JS, namely that you don’t have to deal with all of that. But when working with raw JS calls, much like you would call WinAPI directly under the VCL, data structures have to meet standards.

What about inheritance and more complex structures?

For inheritance (read: building up complex JS structures) where TObject cannot be used, you can base a class-type on JObject – which you declare more or less as a traditional object pascal class:

To create an object instance of a JObject based class, you use the new keyword:

var myObj := new JMyStuff2();

Another note is about obfuscation. Anonymous classes and JObject derived classes survive obfuscation. The compiler makes sure the member names of such classes are not ruined by the obfuscator (see build options). Obfuscation is basically scrambling the JS so it becomes unreadable and more compact, to protect intellectual property. It’s important to keep this in mind. All TObject members are renamed and scrambled, which protects their naming and logic, but this makes them unsuitable as parameters to JS frameworks which expect fields to be named according to a scheme. TObject also has a VMT, which means you don’t want to use it for parameters to begin with.
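Why renamed members break JS frameworks can be shown with a tiny sketch. The `readDuration` function stands in for any framework code that looks up a field by name, and the scrambled name `a` is made up; it is not what the QTX obfuscator actually emits:

```javascript
// Stand-in for a JS framework that expects a field named "duration"
function readDuration(options) {
  return options.duration;
}

// Field names preserved, as with anonymous classes and JObject classes
const preserved = { duration: 500 };

// What a renamed member could look like after obfuscation ("a" is made up)
const scrambled = { a: 500 };

const ok = readDuration(preserved);     // 500
const broken = readDuration(scrambled); // undefined
```

The value is still there in the scrambled object, but the framework can no longer find it under the name it expects.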

To sum up: Use anonymous classes for ad-hoc structured parameters. Or, inherit your traditional classes from JObject if you need strict typing and pseudo inheritance. These are both compatible with standard JavaScript.

QTX is about balance between these two realities. You have the rigid, strict and traditional Object Pascal world in one hand, and the flamboyant, ad-hoc world of JavaScript in the other. Within the QTX dialect we (read: Eric Grange and myself) tried to forge a compromise that brings the best of both languages together. Most of the modern features that DWS and QTX support came out of our initial exploration back in 2010 to 2012. The first year we would debate features almost daily, trying new things and thinking about stuff we could adopt from other languages that works well with Pascal. We had a great time and (imo) came up with a dialect that is excellent for web development.

Notes

This article was first posted on my Patreon project website. If you find QTX interesting, please consider backing the project. You can visit the Patreon website here: www.patreon.com/quartexnow, and you can visit the official website to read more about Quartex Pascal in general here: www.quartexdeveloper.com.

Curious VCL snippet brainfart

I was poking around the VCL system.math unit yesterday when I came across a curious function implementation. Not curious because of complexity, but rather baffling as to why they would implement it like this. While the performance gain in question is insignificant in the great scope of things, the backstory here is that the VCL is full of similarly written code. Code that, when you sum up the penalty on application level, becomes quite considerable.

So while this little snippet is meaningless, it is symptomatic of the maintenance of the VCL these past 15 years. I simply don’t understand why they would let code like this remain when performance improvements are in such high demand.

So what is the problem you ask? Well, “problem” is not the right word for it, baffling is closer to what I feel when I look at these functions. So let me sum up what I see when I look at this code:

- The code allocates two variables for a piece of logic that has absolutely no need for it

- Dividing these two simple expressions into separate blocks makes my eyes hurt

In other words, the code that immediately comes to mind for me would be:

If you are pondering why I would use the $B+ (complete Boolean evaluation) compiler switch on this, there is a reason for that. Namely that the compiler won’t have to divide the logic into a two-piece code block, and then add a branch instruction to exit the block if the first evaluation was false (I know I’m neckbearding this right now).

In short: The code above is actually faster and ensures both expressions are solved on the stack. No variable allocation needed and no adjustment of the stack-page boundary.
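The difference between short-circuit and complete Boolean evaluation can be illustrated outside Delphi too. What follows is a rough analogy in JavaScript, not the VCL code, and it makes no claim about the machine code Delphi emits. In JavaScript `&&` stops at the first false operand, while `&` always evaluates both sides. The helper names are invented for the example:

```javascript
let evaluated = 0;

// Each comparison counts itself so we can see which ones actually ran
function atLeast(value, min) { evaluated++; return value >= min; }
function atMost(value, max) { evaluated++; return value <= max; }

// Short-circuit: the second comparison is skipped when the first is false
function inRangeShort(value, min, max) {
  return atLeast(value, min) && atMost(value, max);
}

// Complete evaluation: both comparisons always run, no early exit
function inRangeComplete(value, min, max) {
  return Boolean(atLeast(value, min) & atMost(value, max));
}

evaluated = 0;
inRangeShort(1, 5, 10);
const shortCount = evaluated;    // 1

evaluated = 0;
inRangeComplete(1, 5, 10);
const completeCount = evaluated; // 2
```

Both variants return the same answer. The only difference is whether an early exit is taken, which is the branch the $B+ switch is meant to avoid.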

Symptomatic?

The snippet above is obviously insignificant when you look at it isolated. It barely justifies writing this text to explain it. But over the past decade the VCL has begun to annoy me a bit, because there are literally thousands of such snippets all over the VCL. Some of you might remember a homebrew project called “the Delphi unit replacement project” from way back? Where some guy took the time to refactor the standard Delphi VCL units (which obviously broke a few laws). It was nothing too elaborate either (no assembler or anything super low-level), just relatively simple refactoring like I demonstrated above, except he did that to every function and procedure in the non-visual scope of the VCL. And to my utter amazement those units provided as much as 30 – 40% performance gain for average applications. In other words, if you recompiled your application using his units, your program could run up to 40% faster.

I honestly did not believe it until I saw it myself.

A lot has been done to improve the VCL in the past 8 years, which is why I find it strange to discover sloppy code like this in a unit literally named “mathematics”. That unit should be optimized to the bone. I mean, just look at what the C/C++ guys are enjoying in their standard libraries, where every inch of the RTL is optimized for performance. The Delphi compiler is just as capable of generating high performance code, but obviously it can’t magically convert wasteful code into gold either.

So during lunch yesterday I took 3 minutes to just make sure I was correct. Again, this post is not really about the above function, but rather the sheer volume of such waste in the VCL. I remember when Delphi was the fastest kid on the block, and knowing how well Delphi can perform, it just annoys me that eyesores like this openly linger in the product.

While the potential savings here are nothing in a real-life scenario, and barely worth mentioning, when you suddenly have thousands of such snippets (if not tens of thousands RTL wide), you can’t help but think that Embarcadero could put more effort into general optimization.

I mean, remembering that homebrew project (illegal as it might have been) and seeing as much as 40% performance gain? You can’t help wondering how Delphi could perform when given the same attention to detail as the first versions of Delphi received. A 30-40% speed boost would put Delphi on par with cutting edge C and C++, which is optimized to the absurd. Add LLVM on top of that and it would fly.

It would be fun to see what LLVM would do with that stock InRange() code. I can only speculate but I’m pretty sure it would end up as a simple stack operation with level 3 (-O3) optimization enabled.

</end of brainfart>

QuartexDeveloper.com is now active

It’s taken a while but Quartex Pascal now has its own website and forum. You can visit QuartexDeveloper.com and check it out.

The SSL certificates will be applied within 72 hours according to the host, so don’t be alarmed that the site shows up under HTTP rather than HTTPS right now; that is just temporary.

Up until now we have operated with a mix of donations and Patreon to help fund the project, but obviously that model doesn’t scale very well. After some debate and polls on the Facebook group I have landed on a new model.

Funding and access model

Starting with the release of version 1.0, which is just around the corner – the model will be as such:

- Backing and support will be handled solely through Patreon

- Facebook group will become open for all

- Patreon tiers will be modified to reflect new model

- Main activity and news will shift to our website, quartexdeveloper.com

- Community build will be available from our website

- Commercial license will also be available from our website

So to sum up, the following 3 options are available:

- Back the project on Patreon, full access to the latest and greatest 24/7

- Community edition, free for educational institutions and open-source projects (non commercial)

- Commercial license is for those that don’t want to back the project on a monthly basis, but instead use the community edition in a professional capacity for commercial work.

With the community edition available, why should anyone bother to back the project you might ask? Well, the public builds will by consequence be behind the latest, bleeding edge builds since the community edition is only updated on minor or major version increments (e.g. when version changes from 1.0 to 1.1). Users who back the project via Patreon will have instant access to new documentation, new packages with visual components, new project templates, RTL fixes and patches as they are released. These things will eventually trickle down to the community edition through version increments, but there is a natural delay involved.

This is how most modern crowd funded projects operate, with LTS builds (long term support) easily available while the latest cutting edge builds are backers only. Documentation, fixes and updates to components, new component packages, hotfixes and so on are the incentive for backing the project.

This is the only way to keep the ball rolling without a major company backing day to day development. We have to get creative and work with what we have. Projects like Mono C# had the luxury of two major Linux distribution companies backing them, enabling Miguel de Icaza to work full time on the codebase. I must admit I was hoping Embarcadero would have stepped in by now, but either way we will get it done.

Onwards!

When Nikola Tesla slaps you over the head

If you have poked around the Quartex Pascal RTL you might have noticed that QTX comes with a serial-number minting system. Creating unique serial numbers that can be mathematically re-traced back to a root key (number sequence) is extremely tricky business. My solution was to dip my fingers into esoteric and occult numerology, because our ancient forebears had a knack for puzzles and number based riddles.

And here I thought I was super clever, only to discover that Nikola Tesla scribbled a similar system on a napkin back in the late 1800s (figuratively speaking). Turns out that the basis of my system is more or less identical to Tesla’s numbers and ultimately bound by their relationships, where you operate with a growth factor that is a multiple of 12, modulated and held in check by Fibonacci, Lucas or Leonardo sequencing.
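For readers unfamiliar with the three sequences mentioned, they are close relatives: each term is the sum of the two before it, with different starting values, and the Leonardo sequence adds one on every step. The sketch below only generates the sequences themselves. It says nothing about how the QTX minting system combines them, and the `sequence` helper is a name chosen for this example:

```javascript
// Build the first `count` terms of a sequence where each term is the sum
// of the two before it, plus an optional constant.
function sequence(first, second, count, extra = 0) {
  const terms = [first, second];
  while (terms.length < count) {
    const n = terms.length;
    terms.push(terms[n - 1] + terms[n - 2] + extra);
  }
  return terms.slice(0, count);
}

const fibonacci = sequence(0, 1, 8);   // 0, 1, 1, 2, 3, 5, 8, 13
const lucas = sequence(2, 1, 8);       // 2, 1, 3, 4, 7, 11, 18, 29
const leonardo = sequence(1, 1, 8, 1); // 1, 1, 3, 5, 9, 15, 25, 41
```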

So my ego got a well deserved slap (which is always healthy, we should all be humble in the presence of that man).

I have never really been that interested in Tesla until recently, and the little I have read about him makes me incredibly sad. This man was not decades ahead of his time, but centuries.

In my view, the biggest tragedy in human history is without a doubt the loss of the great library in Alexandria, Egypt, rivalled only by the murder of Hypatia; a series of events that would eventually catapult humanity as a whole into a dark age that lasted for 2000 years.

But having spent some time this morning reading about Tesla, I would add him to that list of tragic events that have affected our history (or in his case, being prevented from lifting mankind up). This is a man that constructed the walkie-talkie in the late 1800s. He even theorized that both audio and video could be transmitted over a hand-held device. And this was in the late 1800s (!).

Above: The serial-number minting dialog from the IDE. Here we use 12 seed numbers to form the root key, and each serial number is grown from these using natural numbers, as employed by various mystics and esoteric traditions.

Hats off, Tesla. It is a great shame that you were born into a world that neither understood nor appreciated the wonders you delivered.

Nikola Tesla’s notebooks are best read on your knees.

Now I need to scotch tape my ego back together and get to work.

HTMLComponents for Delphi, a closer look

For a while now I have been paying attention to Alexander Sviridenkov’s components for Delphi. First of all because Delphi doesn’t really have that many options when dealing with HTML beyond embedding the classical browsers (Chromium, Firefox or Edge). And while Dave Baldwin’s work has served the community well in the past, TFrameBrowser is 20 years old. So there is definitely room for improvement.

Secondly, in my work with Quartex Pascal, a system that compiles Object Pascal to JavaScript for HTML5, being able to work with HTML and render HTML easily from my IDE is obviously interesting. The form designer in particular could benefit from showing “live” graphics rather than representative rectangles.

All of that is possible to do with Chromium if you run it in an off-screen capacity, but getting good results is very tricky. Chromium Embedded runs in a separate thread (actually, multiple threads), and between sharing video memory and injecting HTML to avoid a full reload, let’s just say that a Delphi native component package would make all the difference. Enter HTMLComponents.

Focus on the essentials first

The way that Alexander has proceeded with his components resembles my own philosophy (or indeed that of anyone who has been a developer for a while). It’s the kind of work approach you end up with through experience, namely, to start with the basics and make sure that is rock solid (read: focus on the foundation code, that’s what’s going to matter the most. Trust me). It’s so tempting to run off on a tangent, adding more and more functionality, typically visually pleasing stuff, but as mature developers will know, if you go down that path what you end up with is a very expensive mess.

Thankfully, Alexander has gone about his work in an orderly, no-nonsense way. He began with the HTML parser, making sure that was flexible, compliant and delivered great performance (over 100 Mb a second!). Then he moved on to the CSS part of the equation and implemented a high performance styling engine. The reason I outline this is because I don’t think people fully grasp the amount of work involved. We think of HTML as a simple tag based format, but the sheer infrastructure you need to represent modern HTML properly is enormous. There is a reason Delphi component vendors shy away from this task. Thankfully Alexander is not one of them.

Scripting?

Next we have the scripting aspect. And here is the twist, if we can call it that. HTMLComponents is not written to be a browser. It is written to enable you to render HTML5 at high speed within a native application, including CSS animations and tweening (a technique made popular by Apple, like sliding forms or bouncing swipe behavior).

In other words, if you are expecting to find a browser, something like Dave Baldwin’s now ancient TFrameBrowser, then you should probably look to the new TEdgeBrowser component from Embarcadero. So JavaScript is not yet natively supported. HTMLComponents falls into the category of a UI presentation framework more than a browser.

If however, like myself, you want to handle presenting HTML5, PDF, RTF and Word documents without a ton of dependencies (Chromium adds 150Mb worth of libraries you need to ship), provide your users with a compliant HTML WYSIWYG Editor – and also deliver those fancy animated UI elements – then you are going to love HTMLComponents.

I should mention that HTMLComponents has its own scripting engine, but it’s not JavaScript. For those situations where a script is needed, you can tap into that engine if you like, or deal with everything natively. It’s your choice.

Document editor

The reason I mentioned Alexander’s architecture and how his codebase has evolved, is because a high performance document rendering engine can be very useful for a variety of tasks.

One thing is rendering HTML5 with all the bells and whistles that entails, but what about RTF? What about Word documents? What about PDF documents? Once you have a rock solid engine capable of representing HTML5, the next logical step is to branch out and work with the other formats of our times. And that is just what Alexander did.

But before we look at those features, let’s have a peek at what components you get.

As you can see from the picture above, HTMLComponents is not just about drawing HTML. Being able to represent HTML is useful in a variety of situations since it simplifies visual feedback that would otherwise be very time consuming to implement. So instead of limiting that power to a single control, HTMLComponents comes with common controls that have been infused with superior graphical powers.

The most powerful component in the above list is without a doubt the HTML editor component (also notice that the package installs both standard and DB variations of the various controls). This is quite simply a fully compliant WYSIWYG editor – complete with all the formatting features you expect.

- WYSIWYG editing.

- Does not use IE or other libraries (100% native Delphi code).

- Supports all Delphi versions from Delphi 5 to Delphi 10.4 Sydney.

- Supports Lazarus (Windows/Linux)

- VCL (Win32/64) FMX (Windows / OSX / Android / iOS / Linux)

- Full support for touch-screen devices – gestures, text selection (Windows Tablets/Screens, iOS, Android, OSX)

- Smooth scrolling on Android and iOS.

- Unicode support for Delphi 6 – 2007 (requires TNTUnicode).

- Scalable (High DPI support).

- Live spellchecking and autocorrection (built-in support for Addict).

- Live text styles preview (font family, size, color, background).

- RTF and MS Word DOCX Import on all platforms.

- PDF export on Windows, Android, OSX and iOS.

- DB-Aware version

- Full support for HTML tags and CSS properties.

- Full access from Delphi code to DOM and Styles.

- Images, lists, blocks, font styles

- Tables support

- Print and Print Preview

- Embedded Find dialog, Text search, Document Index generation.

- Copy from/paste to MS Word, browsers and other applications

- Embedded Markdown, Pascal and HTML syntax highlighting.

- HTML-based editor controls (HtFontCombo, HtFontSizeCombo, HtColorCombo, HtTableAddCombo, HtBorderCombo, HtTableBorderCombo)

That is a solid list of features, and did I mention you get full source-code?

HTML empowered controls

If you are looking over the list of controls above and expecting to find something like a browser or viewer control, you won’t find it. The closest thing to an HTML viewer is the panel control (THtPanel). It exposes properties and methods to populate it with HTML (as do all the controls), set what type of scrollbars you need (if any), and decide how to deal with links, images and CSS styling. After that it’s a matter of feeding some HTML into the control.

Obviously controls like THtCombobox have behavior that is dictated by the OS, but you can style the child elements (rows for example) being displayed, the border etc. using the full might of HTML5. And yes, you can apply CSS transitions there as well, which is (excuse my French) bloody spectacular!

I mentioned that HTMLComponents were not designed to be a browser replacement, but rather to make it easier for native developers to tap into the design power and visual feedback that makes HTML5 so productive to use for UIs. Well, once you have set the properties for a panel and given it some HTML, you can do some pretty amazing things!

HTML takes a lot of grunt work out of the equation for you. For example, let’s say you wanted to produce a demo like the one in the picture above (see direct link in the next paragraph). With all the effects, transitions, pictures and displacement elements. Just how much work would that be in traditional Delphi or C++ ?

Note: You can download the Demo application directly, here:

https://delphihtmlcomponents.com/csstransforms.zip

First you would need a panel container for each picture, then a canvas to hold the picture, then you would need to handle the interaction events, and finally access the canvas to draw the many alpha blended transitions (the picture here really doesn’t do the framework credit, you have to see them to fully appreciate the level of detail and performance HTMLComponents delivers). And all of that is before you have new elements flying in from the sides or above that fade perfectly with the backdrop. All of it working according to a timeline (tweening, as it’s called).

Instead of all that work, having to write a tweening engine, 32 bit alpha-blending DIBs (device independent bitmaps), deal with god knows how much work — you can just deliver some HTML and CSS and let HTMLComponents handle all of it. With zero external dependencies I might add! This is a pure Delphi library. There are no references to external browser controls or anything of the kind. HTMLComponents does exactly what it says on the box – namely to render HTML5 at high speed. And it delivers.

Here is the HTML for one of the pictures with effects in the demo:

<div class="view view-sixth">

<img src="images/13.jpg" />

<div class="mask">

<h2>Hover Style #6</h2>

<p>A wonderful serenity has taken possession ..</p>

<a href="#" class="info">Read More</a>

</div>

</div>

And here is the CSS animation transition code for the same. Please note that the original code contained definitions for IE, Opera, Webkit and Firefox. I removed those for readability:

.view-sixth img {

transition: all 0.4s ease-in-out 0.5s;

}

.view-sixth .mask {

background-color: rgba(146,96,91,0.5);

filter: alpha(opacity=0);

opacity: 0;

transition: all 0.3s ease-in 0.4s;

}

.view-sixth h2 {

filter: alpha(opacity=0);

opacity: 0;

border-bottom: 1px solid rgba(0, 0, 0, 0.3);

background: transparent;

margin: 20px 40px 0px 40px;

transform: scale(10);

transition: all 0.3s ease-in-out 0.1s;

}

.view-sixth p {

filter: alpha(opacity=0);

opacity: 0;

transform: scale(10);

transition: all 0.5s ease-in-out 0.2s;

}

.view-sixth a.info {

filter: alpha(opacity=0);

opacity: 0;

transform: translateY(100px);

transition: all 0.3s ease-in-out 0.1s;

}

.view-sixth:hover .mask {

filter: alpha(opacity=100);

opacity: 1;

transition-delay: 0s;

}

.view-sixth:hover img {

transition-delay: 0s;

}

.view-sixth:hover h2 {

filter: alpha(opacity=100);

opacity: 1;

transform: scale(1);

transition-delay: 0.1s;

}

.view-sixth:hover p {

filter: alpha(opacity=100);

opacity: 1;

transform: scale(1);

transition-delay: 0.2s;

}

.view-sixth:hover a.info {

filter: alpha(opacity=100);

opacity: 1;

transform: translateY(0px);

transition-delay: 0.3s;

}

If CSS is something you normally don’t bother with, the code above might look complex and alien. But there are tons of websites that have wizards, tutorials and even online editors (!), so if you take the time to read up on how CSS transitions work (they are quite easy), you will knock out some impressive effects in no time.

Once you have built up a collection of such effects, just link it into your Delphi application as a resource if you don’t want external files. Personally I think it’s a good thing to set up the UI in separate files like that, because then you can update the UI without forcing a binary installation on your customers.

So if we consider the amount of Delphi code we would have to write to deliver the same demo using stock VCL, sum up the cost in hours, and consider the likely end result as well (Alexander is exceptionally good at graphical coding), I for one can’t imagine why anyone would ignore HTMLComponents. I mean seriously, you are not going to beat Alexander’s code here. And why would you waste all that time when you can buy ready to use controls with source-code for such a modest price?
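As a starting point for reading the rules above: a transition shorthand such as `all 0.4s ease-in-out 0.5s` names the property, the duration, the timing function and the delay, in that order. The sketch below is a simplified JavaScript reader for that four-part form only. It assumes values in seconds and is not a full CSS parser; `parseTransition` is a name invented for this example:

```javascript
// Split a simple transition shorthand into its four parts:
// property, duration, timing function, delay.
function parseTransition(shorthand) {
  const [property, duration, timing, delay] = shorthand.trim().split(/\s+/);
  return {
    property,
    durationMs: Math.round(parseFloat(duration) * 1000),
    timing,
    delayMs: Math.round(parseFloat(delay) * 1000)
  };
}

const img = parseTransition("all 0.4s ease-in-out 0.5s");

// The effect finishes after the delay plus the duration
const totalMs = img.delayMs + img.durationMs;
```

Read this way, the `:hover` rules in the demo mostly change the delay, which is what staggers the heading, paragraph and link as they appear.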

Office formats

I mentioned briefly that with a powerful document rendering engine in place, the next step would be to cover more formats than just HTML. And this is indeed what Alexander has done.

If you invest in his Add-On Office package for HTMLComponents, you will be able to load and display a variety of document formats. And just like HTMLComponents the code is 100% Delphi and has zero dependencies. There are no COM objects or ActiveX bindings involved. Alexander’s code loads, parses and converts these documents instantly to HTML5, and you can view the results using HTMLComponents or in any modern browser.

The following document formats are supported:

- Rich Text Format (RTF)

- MS Word 6-2007 binary format (DOC)

- MS Word XML document (DOCX)

- MS Power Point binary format (PPT)

- MS Power Point XML format (PPTX)

- MS Excel binary format (XLS)

- MS Excel XML format (XLSX)

- Adobe PDF format (PDF)

- Supercalc format (SXC)

- EPUB (electronic books).

Besides the document conversion classes you also get the following code, which is pretty hard-core and useful:

- EMF/WMF to SVG conversion

- TTF to WOFF conversion

- TTF normalization

- TTF to SVG conversion

- CFF to TTF conversion

- Adobe PostScript to TTF conversion.

For me this was a god-send because I was using Gnostice’s PDF viewer to display the documentation for Quartex Pascal in the IDE. Being able to drop that dependency (and cost!) and use HTMLComponents uniformly throughout the IDE makes for a much smaller codebase – and cleaner code.

Final thoughts

The amount of code you get with HTMLComponents is quite frankly overwhelming. One thing is dealing with a tag based format, but once you throw special effects, transitions and standards into the mix – it quickly becomes a daunting task. But Alexander is delivering one of the best written component packages I have had the pleasure of owning. If you need a fresh UI for your application, be it POS, embedded or desktop utilities – HTMLComponents will significantly reduce the time spent.

I should also underline that HTMLComponents works on FMX and mobile devices too (Windows, OS X, Android, iOS and even Linux!). I’m not a huge fan of FMX myself, so being able to design my forms using HTML and write event handlers in native Delphi is perfect. FMX has a lot of power, but the level of detail involved can be frustrating. HTMLComponents takes the grunt out of it, so I can focus on application specific tasks rather than doing battle with the UI.

The only thing I would like to see added is support for JavaScript. HTMLComponents makes it easy for you to intercept scripts and deal with them yourself (HTMLComponents also has a Pascal inspired script), but I do hope Alexander takes the time to add Besen (a native Delphi JavaScript engine) as an option. It really is the only thing I can think of in the “should have” department. Everything else is already in there.

I have to give HTMLComponents 9 out of 10 stars. It would have scored a perfect 10 with JS support. But this is the highest score I have ever given on my blog, so that’s astronomical. Well done Alexander! I look forward to digging into the office suite in the weeks ahead, and will no doubt revisit this topic in further articles.

Visit Alexander’s website here: https://www.delphihtmlcomponents.com/index.html

Delphi and the absolute keyword

There are a lot of hidden gems in the Delphi environment and compiler, and while some might regard the “absolute” keyword as obsolete, I could not disagree more; in fact I find it to be one of the most useful, flexible aspects of Delphi (and Object Pascal in general).

The absolute keyword allows you to define a variable of a specific type, yet instruct the compiler that it should use the memory of another variable. I cannot stress how useful this can be when used right, and how much cleaner it can make code that deals with different classes or types that are binary compatible.

Tab pages revisited

Unlike most I try to avoid the form designer when I can. I’m not a purist about it, I just find that inheriting out your own controls and augmenting them results in significantly faster code, as well as a fine grained control that ordinary DFM persistence can’t always deliver.

For example: Let’s say you have inherited out your own TPageControl. You have also inherited out a few TTabSheet based classes, populating the tabsheets during the constructor – so there is no design data loaded – resulting in faster display time and a more responsive UI.

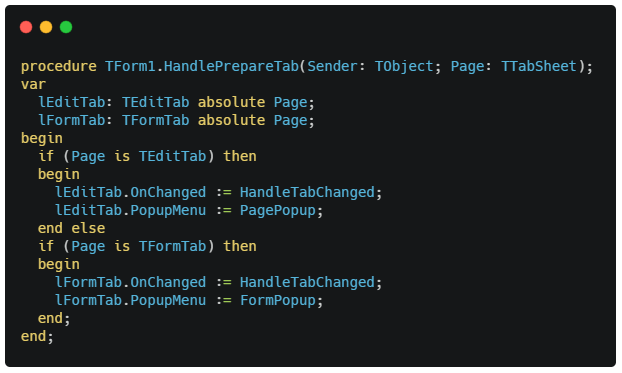

One of my events is called as each TabSheet class is created, allowing me to prepare it: set the icon glyph for the tab, its caption and so on. Here the absolute keyword makes my code faster (since there is no type-casting) and more elegant to read.

All I have to do is check for the type, and once I know which type it is, I use the variable of that type that shares memory with the initial type, TTabSheet. Like this:
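A minimal sketch of that pattern, written as a console program so it can be compiled on its own. The classes TBasePage, TEditorPage and TWelcomePage are stand-ins invented for illustration (TBasePage plays the role of TTabSheet); they are not part of the VCL or of any real project.

```pascal
program AbsoluteTabSketch;
{$IFDEF FPC}{$mode delphi}{$ENDIF}
{$IFNDEF FPC}{$APPTYPE CONSOLE}{$ENDIF}

type
  // Stand-in for TTabSheet (hypothetical, keeps the sketch free of VCL units)
  TBasePage = class
  public
    Caption: string;
  end;

  // Two hypothetical descendants with their own fields
  TEditorPage = class(TBasePage)
  public
    FileName: string;
  end;

  TWelcomePage = class(TBasePage)
  public
    ShowNews: Boolean;
  end;

procedure PreparePage(Page: TBasePage);
var
  // Both variables overlay the storage of the Page parameter:
  // nothing is allocated and no typecast is written at the point of use.
  Editor: TEditorPage absolute Page;
  Welcome: TWelcomePage absolute Page;
begin
  // The type check is still mandatory: absolute performs no checking at all
  if Page is TEditorPage then
  begin
    Editor.FileName := 'untitled.pas';
    Editor.Caption := 'Editor';
  end
  else if Page is TWelcomePage then
  begin
    Welcome.ShowNews := True;
    Welcome.Caption := 'Welcome';
  end;
end;

var
  P: TBasePage;
begin
  P := TEditorPage.Create;
  try
    PreparePage(P);
    WriteLn(P.Caption); // prints: Editor
  finally
    P.Free;
  end;
end.
```

Note that touching Editor when Page is actually a TWelcomePage would compile without complaint and then corrupt memory or crash, which is why the `is` test has to come first.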

Obviously this is not a live example, it’s written like this purely to make a point. Namely that the Page parameter can be accessed as a different type without allocating variables or typecasts. I’m sure there is some memory use, but I find the above more elegant than 3 x nested if/then/else before you can even touch the pointer.

While this is just a small, minuscule example, bordering on pseudo-code, the use of absolute can help speed up intensive code by omitting typecasts. Perhaps not above, but in other, more intensive routines dealing with graphics.

It is actually a tremendous help when dealing with low-level data conversion (like dealing with 8, 15, 16, 24 and 32 bpp data). When you call routines thousands of times, every bit helps – and absolute is one of those keywords that saves a few cycles per use.
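To illustrate the pixel case, here is a small sketch that overlays a record of channels on top of a 32 bpp pixel value. The TBGRA record and the SwapRedBlue function are names made up for this example, and the field order assumes a little-endian CPU and an $AARRGGBB pixel layout.

```pascal
program AbsolutePixelSketch;
{$IFDEF FPC}{$mode delphi}{$ENDIF}
{$IFNDEF FPC}{$APPTYPE CONSOLE}{$ENDIF}

uses
  SysUtils;

type
  // Byte layout of an $AARRGGBB pixel in memory on a little-endian CPU
  // (assumption): the lowest byte comes first.
  TBGRA = packed record
    B, G, R, A: Byte;
  end;

function SwapRedBlue(Pixel: Cardinal): Cardinal;
var
  // Channels shares the memory of Pixel, so no cast, shift or copy is needed
  Channels: TBGRA absolute Pixel;
  Tmp: Byte;
begin
  Tmp := Channels.R;
  Channels.R := Channels.B;
  Channels.B := Tmp;
  Result := Pixel; // Pixel itself now holds the swapped channels
end;

begin
  // On a little-endian machine this prints FF332211
  WriteLn(IntToHex(SwapRedBlue($FF112233), 8));
end.
```

The same trick works for 15 and 16 bpp data by overlaying a Word, but the record layout is tied to the byte order of the target platform, so it is worth keeping such code behind a single, well-named routine.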

Absolute is definitely one of Delphi’s un-sung heroes. But it’s a scalpel, not a chainsaw!

Freedom of speech vs A.I, where does this end?

I must admit I am a bit upset while writing this, but I think I speak for quite a few in what I am about to say. Namely, that the Facebook police must come to an end. It has gone too far, and it’s now infringing on not just American laws, but also violating international laws regarding freedom of expression.

The great proxy

Here’s the problem with platforms like Facebook. First of all they are company based, which means they have the right to include or exclude whomever they like. It is a free platform after all, and nobody is forcing you to sign up for a Facebook account.

Last time I checked, that is Fascism, plain and simple

At the same time they have grown to such a size that they have become a significant social influence. Not having a Facebook account (or Twitter for that matter) in 2020 would be more out of place than the opposite. Facebook has become, despite its status as an independent financially driven organization, the global forum where people share their thoughts, ideas and aspects of their lives.

In other words, Facebook as an organization is free to meddle and influence the politics of the entire planet – without being held accountable. Neither to politicians, nor laws, nor its users. Facebook can in other words do exactly as they please, yet be held accountable to nobody.

Facebook was instrumental in manipulating the British election, and was likewise used as a weapon in the American election. That alone should say something about the power wielded by the platform. Yet somehow they wiggled their way out of it.

Users rights

As a user your rights are simply non-existent. You are completely at the mercy of an A.I (artificial intelligence) that will process whatever you say or post; and should the A.I determine that you have violated the end-user-agreement, it becomes your judge, jury and executioner.

This is simply unacceptable. There are millions (literally) of subtle nuances between languages around the world, and implementing an A.I to determine if a post is suitable or unsuitable is outright impossible.

You would have to master every language on earth, as well as have complete insight into the culture, current events etc. to make a fair ruling.

Speaking out against child abuse

In my example there was a rather nasty case of child molestation in the local news some 3 weeks ago, involving a group of religious extremists. At which my post simply stated

“I am sick and tired of religious extremists. Why does a house have to proverbially be on fire before governments put the flames out? This has to stop. Enough.”

Some 3 weeks later (today) while I was going over posts that had been reported in one of the many programming groups I manage (sigh, the irony), a banner suddenly comes out of nowhere, informing me that I have been sentenced to 7 days in Facebook jail for “hate speech”.

Hate speech? My jaw dropped. Wait, what! In what universe is wanting to protect children from predators deemed as hate? I just sat there biting my lip as I read the verdict of the artificial judge, because the idea of “hate speech” is a very serious accusation. People that post hate, in the true sense of the phrase, would (in my view) be something along the lines of neo-nazis, holocaust deniers, racists, homophobes or right-wing nationalists.

As a person who has voted to the left consistently for 30 years, who wants children protected and religion kept personal; one that has six years of comparative religious studies behind him – I somehow find it very difficult to fit any of the criteria above.

I mean, I’m half Spanish, my best bud is a black gay man, I think WW2 and the atrocities should be compulsory in education, globally, so that we never forget what the nazis did, or the terrible price the world had to pay to secure liberty. I think the war on drugs is a lost cause, and if Michelle Obama ran for president, I would seriously consider immigrating to the US – just so I could vote for her.

So .. Not really a “hate-speech” kinda guy.

You don’t get a say

The biggest challenge in cases like this, is that there are no human beings involved. The second problem is that, under Norwegian law, criticism of religious organizations is allowed (if based on sober facts, otherwise it falls under slander). Now obviously I don’t run around confronting religions (I mean, who does), but what we are talking about here is public news, caught and dealt with by the police; a case where the predators thankfully got caught. As a parent, no – human being, I have nothing but disgust for such crimes, as I imagine all sane individuals have.

Speaking out against crimes in a lawful manner is a right. It is also a mechanism to make sure that nobody harbours resentment that, ultimately, leads to aggression. Censorship in 2020 is a dangerous mistake. One that can only end one way I fear.

And this is the problem with “corporate rulings” based on artificial intelligence. To be honest I doubt Facebook has an actual A.I involved at all. Based on this ruling, it is painfully obvious that they operate with basic keyword filtering (apparently 3 weeks behind schedule). If you cherry pick the words “sick”, “religious”, “fire” and “enough” and use some rudimentary value system for each word, perhaps in an attempt to establish the nature of a sentence, the outcome would be that the phrase is a negative one. But if you read it in its original Norwegian, where linguistic subtleties make the intent evident – it is a man speaking out against abuse. Which is the opposite of hate.

But what really pisses me off is that, as a user, you have no way to complain. There is no human being you can talk with to provide a context. No message field you can use to write a short message. Nothing. The same case that I commented on was reported by all major Norwegian newspapers; it involves what is a crime in every civilized country on earth – yet critique of said crime somehow falls under “hate speech” according to “Facebook law”?

Amiga Disrupt

Well. I guess I’ll be over at the Amiga Disrupt Facebook clone this week. And I am going to spend that time contemplating if Facebook is really worth the effort. Most of my friends are on alternative forums too, so it’s not like I would miss out on much. I might even be tempted to write a mobile client for the AD website to make it more accessible.

You either respect free speech, or you don’t.

One thing is having fucking nazis running around the place spreading hate, another thing is when someone expresses their disgust for the recurring phenomenon that is abuse in authoritative religious settings. What’s next? Companies buying protection online? Sounds insane right, but that’s the next step. Mark my words.

One of the distinct differences between a free society and a fascist society is namely that: the right to express yourself peacefully. Another signature of fascism is their ability to wiggle their way through legal loopholes to avoid accountability.

If we set up a value system ourselves and apply it to some of these social-media companies, I think we all know what the verdict will be.

Food for thought!

QTX Framework for Delphi and FPC is available on BitBucket

The QTX Framework is a large and mature framework that is presently being organized and open-sourced. It’s essentially 20 years of hard work by me, that I am now consolidating and giving back to the community I love so much.

To make a long story short, I have had way too many hobby projects, to the point where my free time was reduced to zero. So for the past six months I have gradually reduced my life considerably (or the complexity of life), with the goal of just having one hobby (Quartex Media Desktop) and one steady job with no extra side projects.

But, instead of letting good code just sit there collecting dust, why not give it to the community that have shared so much with me? Feels like the right thing to do.

QTX Framework

The first unit to be released into open-source (MPL v2) is qtx.vectors.pas, which gives you four vital class types that have been missing from Delphi (and Freepascal). Classes that are extremely powerful and that should have been made a part of the RTL over a decade ago.

- TVector

- TView

- TBitBuffer

- TStorage

- TStorageMemory

- TStorageFile

- TStreamAdapter

- TPropertyBag

- TTyped (*)

- THash (*)

(*) Abstract utility classes

As you probably know, C++ has enjoyed vectors (std::vector) for a long, long time. But I have taken the concept one step further, and coupled it with a unified storage system, so that the vector containers can operate in memory – and with files. So you can now work with large vectors both in memory and on disk. The system is largely expandable, so you can roll your own storage types (cloud, network etc) with relative ease. Same goes for vector allocator classes (for DB ORM style mapping).

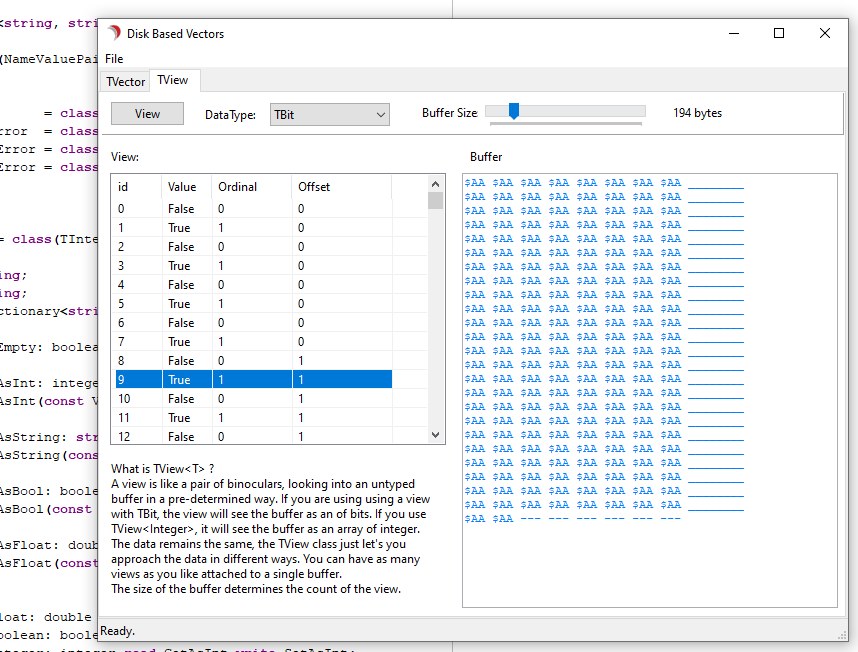

The storage system (untyped buffers) deals with pretty much everything you need to manipulate raw, binary data (and then some). And this is where TView comes in, where you can access a chunk of untyped data (regardless of its location in memory or disk) as an array of typed data.

TVector and showing live memory used by the vector container

I published an article on this over at the Embarcadero Community website, which can be useful if you don’t fully understand the gravity of this:

https://community.idera.com/developer-tools/b/blog/posts/vector-containers-for-delphi

Why should I bother?

Let’s say you have 10,000 Pascal records (or 1,000,000 for that matter) you want to work with, but you don’t want to keep them in memory. Vector containers are now ideal for this line of work thanks to the unified storage system:

If you remove records from the vector, the managed file is physically scaled and truncated

But hey, all of that is easy right? With the exception of live file management you can do this with a TList<T>. Fair enough. But what if you want to look at the data differently, not as TPerson, but rather as bytes or doubles (or another record type altogether)? And keep in mind, the data is managed in a file with no memory overhead – yet the interface remains the same:

Enumerating the bytes in the vector’s buffer, regardless of storage medium

This is just the tip of the iceberg. Vector containers give you all the perks of old-school “file of record” but without the limitations and problems. That’s just one of many aspects.
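To make the idea of looking at the same data through different types concrete, here is a sketch that deliberately does not use the QTX classes (their exact API is not reproduced here). It only relies on TMemoryStream from the standard Classes unit and plain typed pointers; TPerson is an illustrative record.

```pascal
program TypedViewSketch;
{$IFDEF FPC}{$mode delphi}{$ENDIF}
{$IFNDEF FPC}{$APPTYPE CONSOLE}{$ENDIF}

uses
  Classes;

type
  // Illustrative record, packed so its size is exactly 5 bytes
  TPerson = packed record
    Id: Integer;
    Age: Byte;
  end;
  PPerson = ^TPerson;

var
  Buffer: TMemoryStream;
  Person: TPerson;
  PersonView: PPerson;
  ByteView: PByte;
  I, Count: Integer;
begin
  Buffer := TMemoryStream.Create;
  try
    // Store three records back to back in an untyped buffer
    for I := 1 to 3 do
    begin
      Person.Id := I;
      Person.Age := 20 + I;
      Buffer.WriteBuffer(Person, SizeOf(Person));
    end;

    // View 1: walk the buffer as TPerson records
    PersonView := Buffer.Memory;
    Count := Integer(Buffer.Size div SizeOf(TPerson));
    for I := 1 to Count do
    begin
      WriteLn('Id=', PersonView^.Id, ' Age=', PersonView^.Age);
      Inc(PersonView); // advances by SizeOf(TPerson)
    end;

    // View 2: walk the very same memory as raw bytes
    ByteView := Buffer.Memory;
    Count := Integer(Buffer.Size);
    for I := 1 to Count do
    begin
      Write(ByteView^, ' ');
      Inc(ByteView); // advances by one byte
    end;
    WriteLn;
  finally
    Buffer.Free;
  end;
end.
```

This only covers the in-memory half of the story. What the vector containers add on top, as described above, is that the same interface keeps working when the storage is a managed file instead of a memory block.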

Delphi and FPC

Since companies like TMS use Freepascal for their Web Framework, I made sure the code is compatible with both. And there will be plenty of Demos for both Delphi and FPC. Especially as more and more units are added to the framework.

The support for TBit in views is really neat, since you can then access any buffer as an array of bits (which bypasses the problem in C++ containers where std::vector<bool> [which is their take on bits in this context] caused some headaches). Heck, the TStorage based classes alone are 3 times faster than TStream, and support insert and remove functionality (literally scaling the file or memory with truncating if needed), so for binary formats, this should be heaven sent.
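As a rough illustration of what treating a buffer as an array of bits boils down to, here is a sketch with two helper routines. GetBit and SetBit are hypothetical names, not the TBit or TView API, and the bit order (bit 0 is the least significant bit of byte 0) is an assumption.

```pascal
program BitViewSketch;
{$IFDEF FPC}{$mode delphi}{$ENDIF}
{$IFNDEF FPC}{$APPTYPE CONSOLE}{$ENDIF}

// Read bit number Index from a byte buffer (LSB-first within each byte)
function GetBit(const Buffer: array of Byte; Index: Integer): Boolean;
begin
  Result := (Buffer[Index shr 3] and (1 shl (Index and 7))) <> 0;
end;

// Set or clear bit number Index in a byte buffer
procedure SetBit(var Buffer: array of Byte; Index: Integer; Value: Boolean);
var
  Mask: Byte;
begin
  Mask := 1 shl (Index and 7);
  if Value then
    Buffer[Index shr 3] := Buffer[Index shr 3] or Mask
  else
    Buffer[Index shr 3] := Buffer[Index shr 3] and not Mask;
end;

var
  Data: array[0..1] of Byte = (0, 0);
begin
  SetBit(Data, 9, True);    // bit 9 is bit 1 of the second byte
  WriteLn(Data[1]);         // prints: 2
  WriteLn(GetBit(Data, 9)); // prints: TRUE
  SetBit(Data, 9, False);
  WriteLn(Data[1]);         // prints: 0
end.
```

A bit view wraps exactly this index arithmetic behind an array-style property, so calling code never has to deal with shifts and masks.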

Add RTTI and some field-mapping, and you have an ORM engine that will outperform every option out there. Records are faster and more efficient than object instances. And a flat file database is now absurdly simple to make.

Freepascal demo showing how views work, here showing TView

Well, I hope you guys find this useful!

The next unit to be MPL’ed is the parser framework and a ton of parsers for various formats.

Cheers!

Vector Containers For Delphi and FPC

Edit: Version 1.0.1 has been released, with a ton of powerful features. Read about it here and grab your fork: https://jonlennartaasenden.wordpress.com/2020/04/13/qtx-framework-for-delphi-and-fpc-is-available-on-bitbucket/

If you have been looking at C++ and envied them their std::vector classes, wanting the same for Delphi or being able to access untyped memory using a typed-view (basically turning a buffer into an array of <T>) then I have some good news for you!

Vector containers, unified storage model and typed views are just some of the highlights of my vector-library. I did an article on the subject at the Embarcadero community website, so head over and read up on how you can enjoy these features in your Delphi application!

I also added FreePascal support, so that the library can be used with TMS Web Framework.

Head over to the Embarcadero Community website to read the full article

Coders and health in dire times

Below is a post I made on Facebook a while back that might be beneficial for people looking to boost their health or the body’s natural ability to defend itself. I am not a health specialist by any stretch of the imagination, but I have found a few things that have made a tremendous impact on my health over the years. Hopefully they can be as beneficial to others as they have been for me.

I have experienced one life threatening, and one life changing situation in the past 20 years. The first was back in 2008 when I almost died of blood poisoning (overworked, immune system flat, caught pneumonia and a normal bacteria from my throat found its way into my bloodstream through my lungs). I was initially declared dead since I had no measurable pulse, but they managed to bring me back from an inch away from death.

The life changing episode was in 2013, when I slipped and fell down a flight of stairs while carrying a washing machine, severely injuring my spine in two places, with permanent nerve damage as a result (essentially it feels like being stabbed with a knife 24/7). It has taken 7 years to recover and learn to live with that injury. I evolved a regimen of Tibetan based yoga and herbs that, combined, allows me to function without pain.

I get extremely upset when I see these new-age, mumbo-jumbo companies trying to capitalize on the present situation; selling so called miracle cures for the Corona Virus. It is an insult to those that have died from it, and it undermines both western and eastern medicine. It is an incredibly irresponsible and heartless thing to do.

There are plants that can help strengthen your body’s natural capacity to fight off infections, provide better stamina and clarity of mind – which is what my focus is on here. But under no circumstances does a miracle herb exist.

My post here is meant purely as a positive suggestion based on my experience, nothing more. Always check herbs properly, and make sure you use qualified sources when evaluating.

Note: ALWAYS consult your doctor before taking any supplements whatsoever. The ones I mention here are harmless if used properly (and have been used for thousands of years), but you must always treat potent medicinal plants with respect.

Post from Facebook

A friend of mine asked me how on earth I managed to survive my spinal injury. In the immediate years after the accident the doctors pump you full of various medication, mostly painkillers, which has a terrible effect on both body and mind. A lot of people never recover from such an accident, and never get up. They end up entangled in a web of medication and pain, even after the injury itself has largely healed.

After the accident I was unable to walk for roughly 6 months. I was ripped before the accident with solid 2 hr workouts 5 days a week. After the accident, I was helplessly confined to bed. I even needed help to shower and go to the toilet (a terribly humbling experience).

But, instead of succumbing to fear and depression – I decided to spend my time putting all those alternative remedies to the test. I spent between $5000 and $6000 systematically testing every single so-called “miracle herb” I could find.

No such thing as a miracle herb

As I expected, 99% of them had absolutely no effect on my situation whatsoever. None.

Since I belong to the Tibetan Buddhist tradition, I decided to seek advice from both the Hindu and Buddhist communities in the region (both schools of thought have long traditions in natural medicine), and I was given a list of herbs to use. It took a couple of weeks for the herbs to arrive from India, but I’m glad they did – because out of all the claims out there, these actually had an immediate and very tangible effect on the body. I must admit I did not expect that after so much hum-buggery.

Out of those I tested that were available in local health stores, one of which is imported from the US (Green Magma), only one other passed the test. And I tested almost 200 different herbs, mixtures and substances; as much as 90% of them were useless, at least compared to the claims made for their efficacy.

Never play around with herbs, always be careful and remember that they can have a tremendous effect on our bodies and mind. Always talk to your doctor first.

These are the only 4 that actually worked – and that provide stamina, energy, alertness and give the body’s natural defenses a boost. And I mean this very literally. Within 20 minutes of eating GM (#4) my body went from being an acidic hell, into feeling strong and normal again.

- Ashwagandha (a tree bark)

- Shilajit (mineral substance from the Himalayas)

- Vita Biosa (herbs and digestive bacteria)

- Green Magma (green juice from barley leaves)

Let’s have a quick look at each of them. They are easy enough to google, and all of these are easily available through Amazon (unless you have a dedicated Ayurvedic outlet locally).

Vita Biosa

Vita Biosa contains the good kind of bacteria, minerals and food herbs we once had in our diet before we started machine processing. These are the bacteria, minerals and food herbs that our digestive system is supposed to house (our digestive system has not changed since before the last ice age, some 14000 years ago) – but which machine processing has largely eliminated.

For modern man, a teaspoon of this will make you sick for days if you’re not accustomed to it. Start with 1/3 teaspoon in water, then gradually work your way up to 1 teaspoon a day. These bacteria will eat all the nasty stuff that modern life leaves behind. I had a bleeding ulcer because of the heavy medication I was put on. It took only 5-6 days with Vita Biosa and the bleeding stopped. Two weeks and the body had healed itself (!).

Note: If you can, buy the one with a small fraction of St.John’s herb, this is the only herb that has a proven effect on liver cells (helps the liver re-generate). Vita Biosa is worth its weight in gold, and the effect on your digestive system (and by consequence: mood and alertness) is remarkable. I literally had no idea how much our gut affects our thoughts, emotions and energy levels.

Green Magma

Green Magma is a Japanese formula that was used after WW2, and it was used to treat patients affected by the devastating impact of nuclear war. Its core ingredient is green barley sprouts that are pressed, and the juice is dried into a powder that you can mix with water (there is more to it than that, but that’s the gist of it).

It’s also one of the few herbs that the body will recognize as food. If you can’t find the energy to get up, or you feel like you are burning out – order this immediately. It also eliminates acidity which is often a side effect of western medication, stress and unhealthy diets.

Drinking a glass (1 ts in water) for a week or two is enough. You will notice when you don’t need it anymore (you won’t crave it).

Shilajit

Shilajit is a natural mineral substance that comes out of the ground in the Himalayas. It was noticed by researchers because the inhabitants in the region generally enjoyed longer lifespans than what is average in the west, and they don’t suffer as much sickness as we westerners do.

It smells horrible and tastes even worse, so order the gel-capped variations if you can. You will notice a boost in energy and overall vitality (relative to your current health of course). I couldn’t even check my mailbox without taking breaks after my accident, so I could feel the effect of these things very fast. And this is 100 times more potent than any vitamins you find at a pharmacy.

Ashwagandha

Ashwagandha is a tree bark and root. It is known for the stamina and energy it provides. It is used for healing in India, Tibet and parts of Asia.

It’s also used by older men who want to conceive children in their golden years, so use this with caution and stick to the recommended dosage. This herb does wonders for the immune system, and it provides a great deal of mental clarity and calm under stress. It is used for a variety of illnesses, from arthritis to infections. This is a very potent adaptogen.

If I were to pick just two of these, I would pick Ashwagandha and Green Magma, those two have helped me through some of the worst challenges in my life.

The most effective was also the most affordable

The bonus is – these herbs are very cheap and available almost anywhere. When I first discovered them they were less known, but since that time knowledge of them has spread. You can now order all 4 of these combined via Amazon for less than $100. And they will do 1000 times more good than any new age miracle cure nonsense. These are herbs that will help you for a lifetime if you remember to take them regularly.

So if you are looking to help your body to defend itself, or some go-to herbs to help you cope with deadlines – then these are reliable and will serve you well. For me personally, they have been the difference between being able to function and work – or not functioning at all. They literally changed my life.

So instead of raiding your local pharmacy, buying 10,000 rolls of toilet paper, or letting scam-artists sell miracle cures that never work – get these 4 reliable herbs, make sure you read the recommended dosages (talk to your doctor first if you have a condition or take medication) and then safely and calmly stick to the quarantine plan.

Stay healthy and safe, and listen to your doctor!

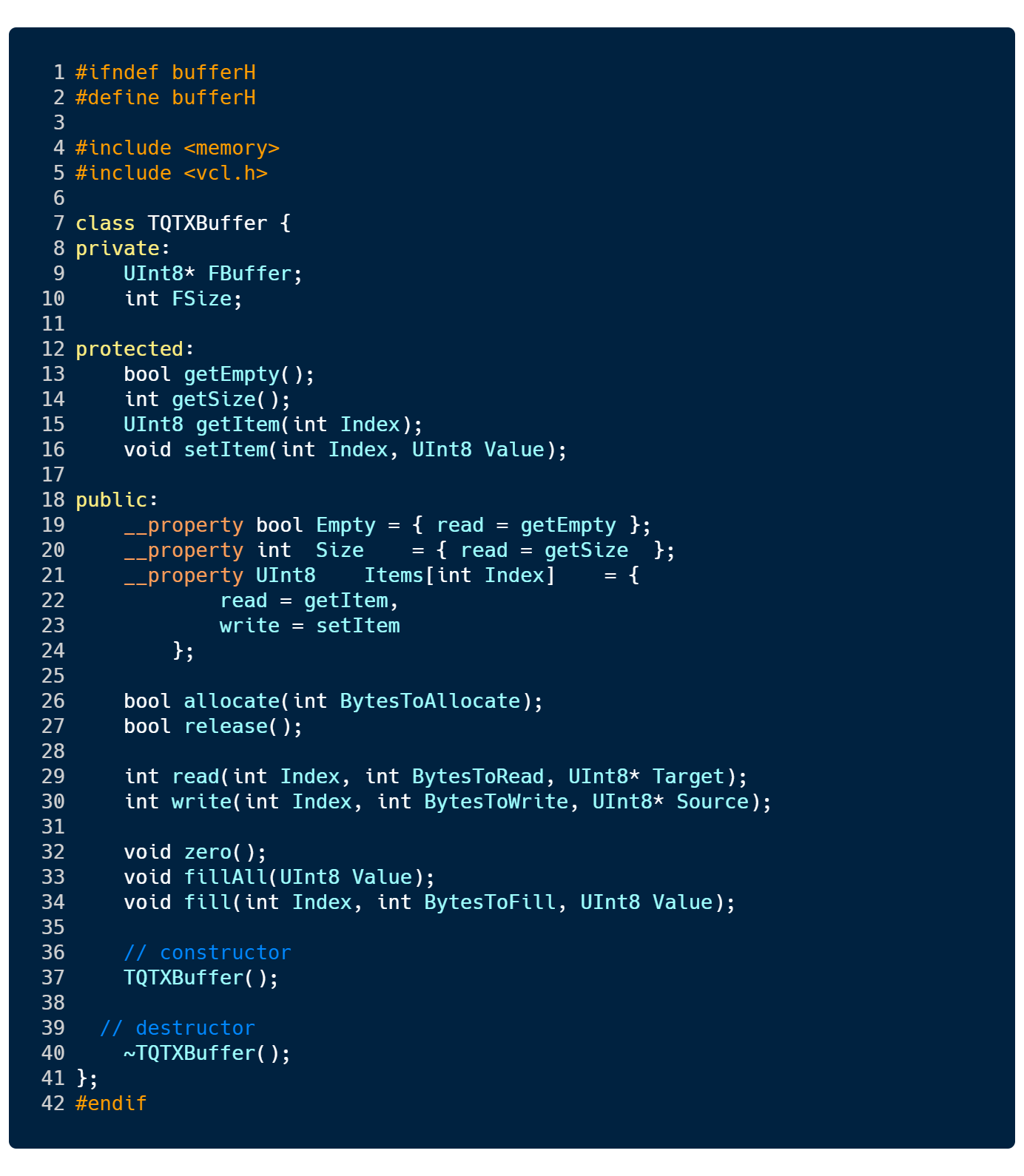

C/C++ porting, QTX and general status

C is a language that I used to play around with a lot back in the Amiga days. I think the last time I used a C compiler to write a library must have been in 1992 or something like that? I held on to my Amiga 1200 for as long as I could – but having fallen completely in love with Pascal, I eventually switched to x86 and went down the Turbo Pascal road.

Lately however, C++ developers have been asking for their own Developer group on Facebook. I run several groups on Facebook in the so-called “developer” family. So you have Delphi Developer, FPC Developer, Node.JS Developer and now – C++Builder developer. The groups more or less tend to themselves, and the node.js and FPC groups are presently being seeded (meaning, that the member count is being grown for a period).

The C++Builder group, however, has almost the same activity level as the Delphi group, thanks to some really good developers that post links, tips and help solve questions. I was also fortunate enough to have David Millington come on the Admin team. David is leading the C++Builder project, so his insight and knowledge of both language and product is exemplary. Just like Jim McKeeth, he is a wonderful resource for the community and chimes in with answers to tricky questions whenever he has time to spare.

Getting back in the saddle

Having worked some 30 years with Pascal and Object Pascal, 25 of those years in Delphi, C/C++ is never far away. I have an article on the subject that I’ve written for the Idera Community website, so I won’t dig too deep into that here – but needless to say, Rad Studio consists of two languages: Object Pascal and C/C++, so no matter how much you love either language, the other is never far away.

So I figured it was time for this old dog to learn some new tricks! I have always said that it’s wise to learn a language immediately below and above your comfort zone. So if Delphi is your favorite language, then C/C++ is below you (meaning: more low level and complex). Above you are languages like JavaScript and C#. Learning JavaScript makes strategic sense (or use DWScript to compile Pascal to JavaScript like I do).

When I started out, the immediate language below Object Pascal was never C, but assembler. So for the longest time I turned to assembler whenever I needed a speed boost; graphics manipulation and processing pixels is especially a field where assembly makes all the difference.

But since C++Builder is indeed an integral part of Rad Studio, and Object Pascal and C/C++ so intimately connected (they have evolved side by side), why not enjoy both assembly and C right?

So I decided to jump back into the saddle and see what I could make of it.

C/C++ is not as hard as you think

I’m having a ball writing C/C++, and just like Delphi – you can start where you are.

While I’m not going to rehash the article I have already prepared for the Idera Community pages here, I do want to encourage people to give it a proper try. I have always said that if you know an archetypal language, you can easily pick up other languages, because the archetypal languages will benefit you for a lifetime. This has to do with archetypal languages operating according to how computers really work, as opposed to optimistic languages (a term from the DB world, as in optimistic locking), also called contextual languages, like C#, Java and JavaScript, which are based on how human beings would like things to be.

So I now had a chance to put my money where my mouth is.

When I left C back in the early 90s, I never bothered with OOP. I mean, I used C purely for shared libraries anyways, while the actual programs were done in Pascal or a hybrid language called Blitz Basic. The latter compiled to razor sharp machine code, and you could use inline assembly – which I used a lot back then (very few programmers on those machines went without assembler, it was almost given that you could use 68k in some capacity).

Without ruining the article about to be published, I had a great time with C++Builder. It took a few hours to get my bearings, but since both the VCL and FMX frameworks are there – you can approach C/C++ just like you would Object Pascal. So it’s a matter of getting an overview really.

Needless to say, I’ll be porting a fair share of my libraries to C/C++ when I have time (those that make sense under that paradigm). It’s always good to push yourself and there are plenty of subtle differences that I found useful.

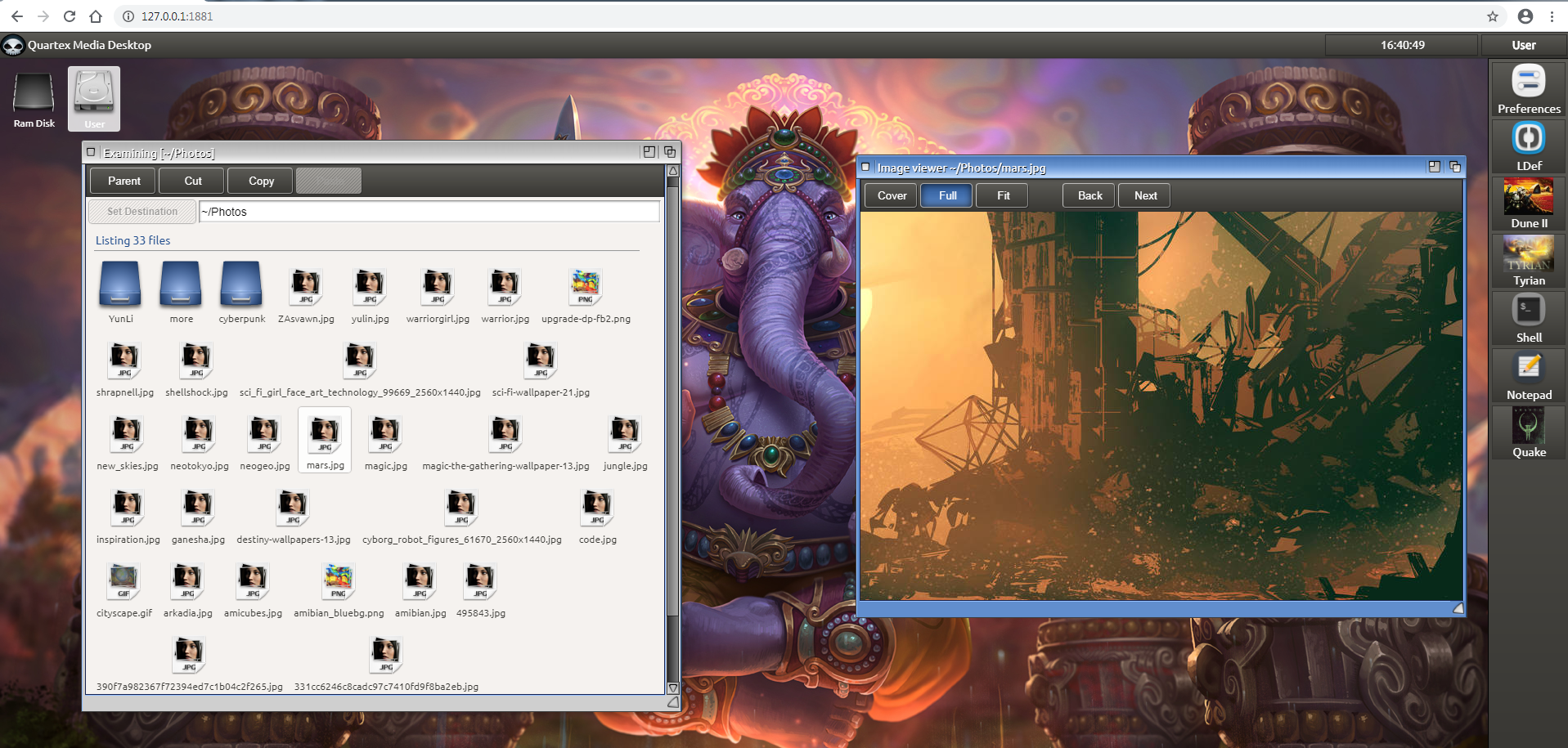

Quartex Media Desktop

When I last wrote about QTX we were nearing the completion of the FileSystem and Task Management service. The prototype had all its file-handling directly in the core service (or server) which worked just fine — but it was linked to the Smart Pascal RTL. It has taken time to write a new RTL + a full multi-user, platform independent service stack and desktop (phew!) but we are seeing progress!

The QTX Baseline backend services are now largely done

The filesystem service is now largely done! There are a few synchronous calls I want to get rid of, but thankfully my framework has both async and sync variations of all file procedures – so that is now finished.

To make that clearer: first I have to wrap and implement the functionality for the RTL. Once they are in the RTL, I can use those functions to build the service functions. So yeah, it’s been extremely elaborate — but thankfully it’s also become a rich, well organized codebase (both the RTL and the Quartex Media Desktop codebases) – so I think we are ready to get cracking on the core!

The core is still operating with the older API. So our next step is to remove that from the core and instead delegate calls to the filesystem to our new service. So the core will simply be reduced to a post-office or traffic officer if you like. Messages come in from the desktops, and the core delegates the messages to whatever service is in charge of them.
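To make the post-office idea concrete, here is a minimal sketch of such a router. It is written in TypeScript rather than Quartex Pascal, and the message shape, the `Core` class and the `filesystem` service name are all assumptions made for illustration. It is not the actual Ragnarok message format.

```typescript
// Hypothetical message shape: "service" names the owner of the request
interface Message {
  service: string;
  ticket: string;
  payload: unknown;
}

// A service is reduced to a single handler function in this sketch
type ServiceHandler = (message: Message) => string;

class Core {
  private routes = new Map<string, ServiceHandler>();

  // A service announces itself to the core under a name
  register(name: string, handler: ServiceHandler): void {
    this.routes.set(name, handler);
  }

  // The core does no work itself: it looks up the owner and forwards
  dispatch(message: Message): string {
    const handler = this.routes.get(message.service);
    if (handler === undefined) {
      throw new Error(`No service registered for "${message.service}"`);
    }
    return handler(message);
  }
}

// Usage: the desktop sends a message, the core hands it to the owner
const core = new Core();
core.register("filesystem", (m) => `filesystem handled ticket ${m.ticket}`);
console.log(core.dispatch({ service: "filesystem", ticket: "42", payload: null }));
```

The point of the design is that adding a new service only means registering another route. The core itself never grows.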

But, this also means that both the core and the desktop must use the new and fancy messages. And this is where I did something very clever.

While I was writing the service, I also wrote a client class to test it (obviously). And the way the core works means that the same client the core uses to talk to the services can be used by the desktop as well.

So our work in the desktop to get file access and drives running again is to wrap the client in our TQTXDevice ancestor class. The desktop NEVER accesses the API directly. All it knows about are these device drivers (or object instances). Which is how we solve things like Dropbox and Google Drive support. The desktop won’t have the faintest clue that it’s using Dropbox, or copying files between a local disk and Google Drive for example, because it only communicates with these device classes.
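A rough sketch of that driver idea, again in TypeScript and with made-up names: the `Device` interface, `MemoryDevice` and `copyFile` below are hypothetical stand-ins, since the real TQTXDevice surface is not shown here. The only thing it is meant to demonstrate is that the calling code never learns what sits behind a device.

```typescript
// Hypothetical driver contract (not the actual TQTXDevice API)
interface Device {
  readonly name: string;
  read(path: string): string;
  write(path: string, data: string): void;
}

// Stand-in driver that keeps files in memory. A Dropbox or Google Drive
// driver would implement the same interface on top of its own client.
class MemoryDevice implements Device {
  readonly name: string;
  private files = new Map<string, string>();

  constructor(name: string) {
    this.name = name;
  }

  read(path: string): string {
    const data = this.files.get(path);
    if (data === undefined) {
      throw new Error(`${this.name}: file not found: ${path}`);
    }
    return data;
  }

  write(path: string, data: string): void {
    this.files.set(path, data);
  }
}

// Desktop-side code: it only sees Device, never the API behind it
function copyFile(from: Device, to: Device, path: string): void {
  to.write(path, from.read(path));
}
```

Copying between a local disk and a cloud drive then becomes `copyFile(local, cloud, "notes.txt")`, with no special case for either side.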

Recursive stuff

One thing that sucked about the node.js function for deleting a folder is that its recursive parameter doesn’t work on Windows or OS X. So I had to implement a full recursive deletefolder routine manually. Not a big thing, but slightly more painful than expected under asynchronous execution. Thankfully, Object Pascal allows for inline defined procedures, so I didn’t have to isolate it in a separate class.
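For readers who have not written this kind of routine under callbacks, the general shape looks roughly like the sketch below. This is not the routine from the service. It is a plain TypeScript illustration on top of the standard node.js `fs` calls (`readdir`, `lstat`, `unlink`, `rmdir`), and the function name `deleteFolderRecursive` is my own. The inline `next` helper plays the role that an inline defined procedure plays in Object Pascal.

```typescript
import * as fs from "fs";
import * as path from "path";

type DoneCB = (error: Error | null) => void;

// Delete a folder and everything below it using only callback-style calls
function deleteFolderRecursive(folder: string, done: DoneCB): void {
  fs.readdir(folder, (err, names) => {
    if (err) {
      done(err);
      return;
    }

    // Inline helper: handle entries one at a time, so only one file
    // operation is in flight and the first error stops the walk
    const next = (index: number): void => {
      if (index >= names.length) {
        // Every entry is gone, so the folder itself can be removed
        fs.rmdir(folder, done);
        return;
      }

      const full = path.join(folder, names[index]);
      fs.lstat(full, (statErr, stats) => {
        if (statErr) {
          done(statErr);
          return;
        }

        // Continue with the next entry, or report the failure
        const step: DoneCB = (e) => (e ? done(e) : next(index + 1));

        if (stats.isDirectory()) {
          deleteFolderRecursive(full, step); // descend first
        } else {
          fs.unlink(full, step); // plain file or symlink
        }
      });
    };

    next(0);
  });
}
```

Handling one entry at a time is the simplest thing that works. Deleting entries in parallel would be faster on large trees, but then you also need to count outstanding callbacks and make sure `done` is only called once.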

Here is some of the service code, a tiny speck compared to the full shebang, but it gives you an idea of what life is like under async conditions:

unit service.file.core;

interface

{.$DEFINE DEBUG}

const

CNT_PREFS_DEFAULTPORT = 1883;

CNT_PREFS_FILENAME = 'QTXTaskManager.preferences.ini';

CNT_PREFS_DBNAME = 'taskdata.db';

CNT_ZCONFIG_SERVICE_NAME = 'TaskManager';

uses

qtx.sysutils,

qtx.json,

qtx.db,

qtx.logfile,

qtx.orm,

qtx.time,

qtx.node.os,

qtx.node.sqlite3,

qtx.node.zconfig,

qtx.node.cluster,

qtx.node.core,

qtx.node.filesystem,

qtx.node.filewalker,

qtx.fileapi.core,

qtx.network.service,

qtx.network.udp,

qtx.inifile,

qtx.node.inifile,

NodeJS.child_process,

ragnarok.types,

ragnarok.Server,

ragnarok.messages.base,

ragnarok.messages.factory,

ragnarok.messages.network,

service.base,

service.dispatcher,

service.file.messages;

type

TQTXTaskServiceFactory = class(TMessageFactory)

protected

procedure RegisterIntrinsic; override;

end;

TQTXFileWriteCB = procedure (TagValue: variant; Error: Exception);

TQTXFileStateCB = procedure (TagValue: variant; Error: Exception);

TQTXUnRegisterLocalDeviceCB = procedure (TagValue: variant; DiskName: string; Error: Exception);

TQTXRegisterLocalDeviceCB = procedure (TagValue: variant; LocalPath: string; Error: Exception);

TQTXFindDeviceCB = procedure (TagValue: variant; Device: JDeviceInfo; Error: Exception);

TQTXGetDisksCB = procedure (TagValue: variant; Devices: JDeviceList; Error: Exception);

TQTXGetFileInfoCB = procedure (TagValue: variant; LocalName: string; Info: JStats; Error: Exception);

TQTXGetTranslatePathCB = procedure (TagValue: variant; Original, Translated: string; Error: Exception);

TQTXCheckDevicePathCB = procedure (TagValue: variant; PathName: string; Error: Exception);

TQTXServerExecuteCB = procedure (TagValue: variant; Data: string; Error: Exception);

TQTXTaskService = class(TRagnarokService)

private

FPrefs: TQTXIniFile;

FLog: TQTXLogEmitter;

FDatabase: TSQLite3Database;

FZConfig: TQTXZConfigClient;

FRegHandle: TQTXDispatchHandle;

FRegCount: integer;

procedure HandleGetDevices(Socket: TNJWebSocketSocket; Request: TQTXBaseMessage);

procedure HandleGetDeviceByName(Socket: TNJWebSocketSocket; Request: TQTXBaseMessage);

procedure HandleCreateLocalDevice(Socket: TNJWebSocketSocket; Request: TQTXBaseMessage);

procedure HandleDestroyDevice(Socket: TNJWebSocketSocket; Request: TQTXBaseMessage);

procedure HandleFileRead(Socket: TNJWebSocketSocket; Request: TQTXBaseMessage);

procedure HandleFileReadPartial(Socket: TNJWebSocketSocket; Request: TQTXBaseMessage);

procedure HandleGetFileInfo(Socket: TNJWebSocketSocket; Request: TQTXBaseMessage);

procedure HandleFileDelete(Socket: TNJWebSocketSocket; Request: TQTXBaseMessage);

procedure HandleFileWrite(Socket: TNJWebSocketSocket; Request: TQTXBaseMessage);

procedure HandleFileWritePartial(Socket: TNJWebSocketSocket; Request: TQTXBaseMessage);

procedure HandleFileRename(Socket: TNJWebSocketSocket; Request: TQTXBaseMessage);

procedure HandleGetDir(Socket: TNJWebSocketSocket; Request: TQTXBaseMessage);

procedure HandleMkDir(Socket: TNJWebSocketSocket; Request: TQTXBaseMessage);

procedure HandleRmDir(Socket: TNJWebSocketSocket; Request: TQTXBaseMessage);

procedure ExecuteExternalJS(Params: array of string;

TagValue: variant; const CB: TQTXServerExecuteCB);

procedure SendError(Socket: TNJWebSocketSocket; Request: TQTXBaseMessage; Message: string);

protected

function GetFactory: TMessageFactory; override;

procedure SetupPreferences(const CB: TRagnarokServiceCB);

procedure SetupLogfile(LogFileName: string;const CB: TRagnarokServiceCB);

procedure SetupDatabase(const CB: TRagnarokServiceCB);

procedure ValidateLocalDiskName(TagValue: variant; Username, DeviceName: string; CB: TQTXCheckDevicePathCB);

procedure RegisterLocalDevice(TagValue: variant; Username, DiskName: string; CB: TQTXRegisterLocalDeviceCB);

procedure UnRegisterLocalDevice(TagValue: variant; UserName, DiskName:string; CB: TQTXUnRegisterLocalDeviceCB);

procedure GetDevicesForUser(TagValue: variant; UserName: string; CB: TQTXGetDisksCB);

procedure FindDeviceByName(TagValue: variant; UserName, DiskName: string; CB: TQTXFindDeviceCB);

procedure FindDeviceByType(TagValue: variant; UserName: string; &Type: JDeviceType; CB: TQTXGetDisksCB);

procedure GetTranslatedPathFor(TagValue: variant; Username, FullPath: string; CB: TQTXGetTranslatePathCB);

procedure GetFileInfo(TagValue: variant; UserName: string; FullPath: string; CB: TQTXGetFileInfoCB);

procedure SetupTaskTable(const TagValue: variant; const CB: TRagnarokServiceCB);

procedure SetupOperationsTable(const TagValue: variant; const CB: TRagnarokServiceCB);

procedure SetupDeviceTable(const TagValue: variant; const CB: TRagnarokServiceCB);

procedure AfterServerStarted; override;

procedure BeforeServerStopped; override;

procedure Dispatch(Socket: TNJWebSocketSocket; Message: TQTXBaseMessage); override;

public

property Preferences: TQTXIniFile read FPrefs;

property Database: TSQLite3Database read FDatabase;

procedure SetupService(const CB: TRagnarokServiceCB);

constructor Create; override;

destructor Destroy; override;

end;

implementation

//#############################################################################

// TQTXTaskServiceFactory

//#############################################################################

procedure TQTXTaskServiceFactory.RegisterIntrinsic;

begin

writeln("Registering task interface");

&Register(TQTXFileGetDeviceListRequest);

&Register(TQTXFileGetDeviceByNameRequest);

&Register(TQTXFileCreateLocalDeviceRequest);

&Register(TQTXFileDestroyDeviceRequest);

&Register(TQTXFileReadPartialRequest);

&Register(TQTXFileReadRequest);

&Register(TQTXFileWritePartialRequest);

&Register(TQTXFileWriteRequest);

&Register(TQTXFileDeleteRequest);

&Register(TQTXFileRenameRequest);

&Register(TQTXFileInfoRequest);

&Register(TQTXFileDirRequest);

&Register(TQTXMkDirRequest);

&Register(TQTXRmDirRequest);

end;

//#############################################################################

// TQTXTaskService

//#############################################################################

constructor TQTXTaskService.Create;

begin

inherited Create;

FPrefs := TQTXIniFile.Create();

FLog := TQTXLogEmitter.Create();

FDatabase := TSQLite3Database.Create(nil);

FZConfig := TQTXZConfigClient.Create();

FZConfig.Port := 2292;

self.OnUserSignedOff := procedure (Sender: TObject; Username: string)

begin

WriteToLogF("We got a service signal! User [%s] has signed off completely", [Username]);

end;

MessageDispatch.RegisterMessage(TQTXFileGetDeviceListRequest, @HandleGetDevices);

MessageDispatch.RegisterMessage(TQTXFileGetDeviceByNameRequest, @HandleGetDeviceByName);

MessageDispatch.RegisterMessage(TQTXFileCreateLocalDeviceRequest, @HandleCreateLocalDevice);

MessageDispatch.RegisterMessage(TQTXFileDestroyDeviceRequest, @HandleDestroyDevice);

MessageDispatch.RegisterMessage(TQTXFileReadRequest, @HandleFileRead);

MessageDispatch.RegisterMessage(TQTXFileReadPartialRequest, @HandleFileReadPartial);

MessageDispatch.RegisterMessage(TQTXFileWriteRequest, @HandleFileWrite);

MessageDispatch.RegisterMessage(TQTXFileWritePartialRequest, @HandleFileWritePartial);

MessageDispatch.RegisterMessage(TQTXFileInfoRequest, @HandleGetFileInfo);

MessageDispatch.RegisterMessage(TQTXFileDeleteRequest, @HandleFileDelete);

MessageDispatch.RegisterMessage(TQTXMkDirRequest, @HandleMkDir);

MessageDispatch.RegisterMessage(TQTXRmDirRequest, @HandleRmDir);

MessageDispatch.RegisterMessage(TQTXFileRenameRequest, @HandleFileRename);

MessageDispatch.RegisterMessage(TQTXFileDirRequest, @HandleGetDir);

end;

destructor TQTXTaskService.Destroy;

begin

// decouple logger from our instance

self.logging := nil;

// Release prefs + log

FPrefs.free;

FLog.free;

FZConfig.free;

inherited;

end;

procedure TQTXTaskService.SendError(Socket: TNJWebSocketSocket; Request: TQTXBaseMessage; Message: string);

begin

var reply := TQTXErrorMessage.Create(request.ticket);

try

reply.Code := CNT_MESSAGE_CODE_ERROR;

reply.Routing.TagValue := Request.Routing.TagValue;

reply.Response := Message;

if Socket.ReadyState = rsOpen then

begin

try

Socket.Send( reply.Serialize() );

except

on e: exception do

WriteToLog(e.message);

end;

end else

WriteToLog("Failed to dispatch error, socket is closed");

finally

reply.free;

end;

end;

procedure TQTXTaskService.ExecuteExternalJS(Params: array of string;

TagValue: variant; const CB: TQTXServerExecuteCB);

begin

var LTask: JChildProcess;

var lOpts := TVariant.CreateObject();

lOpts.shell := false;

lOpts.detached := true;

Params.insert(0, '--no-warnings');

// Spawn a new node process (no shell is involved, since shell = false)

try

LTask := child_process().spawn('node', Params, lOpts );

except

on e: exception do

begin

if assigned(CB) then

CB(TagValue, e.message, e);

exit;

end;

end;

// Map general errors on process level

LTask.on('error', procedure (error: variant)

begin

{$IFDEF DEBUG}

writeln("error->" + error.toString());

{$ENDIF}

WriteToLog(error.toString());

if assigned(CB) then

CB(TagValue, "", nil);

end);

// map stdout so we capture the output